The maximum sequence length is determined by finding the length of the longest sequence. The pad_sequences function is used to pad the sequences to the maximum length. Next, we define the model architecture:

model = keras.Sequential([

keras.layers.Embedding(len(tokenizer.word_index) + 1, \

16, input_length=max_sequence_length),

keras.layers.Flatten(),

keras.layers.Dense(1, activation=’sigmoid’)

])

A sequential model is created using the Sequential class from Keras. The model consists of an embedding layer, a flatten layer, and a dense layer. The embedding layer converts the tokens into dense vectors. The flatten layer flattens the input for the subsequent dense layer. The dense layer is used for binary classification with sigmoid activation. Now, we need to compile the model:

model.compile(optimizer=’adam’, loss=’binary_crossentropy’, \

metrics=[‘accuracy’])

The model is compiled with the Adam optimizer, binary cross-entropy loss, and accuracy as the metric. Now, we train the model:

model.fit(padded_sequences, np.array(labels), epochs=10)

The model is trained on the padded sequences and corresponding labels for a specified number of epochs. Next, we classify a new sentence:

new_sentence = [“This movie is good”]

new_sequence = tokenizer.texts_to_sequences(new_sentence)

padded_new_sequence = pad_sequences(new_sequence, \

maxlen=max_sequence_length)

raw_prediction = model.predict(padded_new_sequence)

print(“raw_prediction:”,raw_prediction)

prediction = (raw_prediction > 0.5).astype(‘int32’)

print(“prediction:”,prediction)

A new sentence is provided for classification. The sentence is converted to a sequence of tokens using the tokenizer. The sequence is padded to match the maximum sequence length used during training. The model predicts the sentiment class for the new sentence. Finally, we print the predicted label:

if prediction[0][0] == 1:

print(“Positive”)

else:

print(“Negative”)

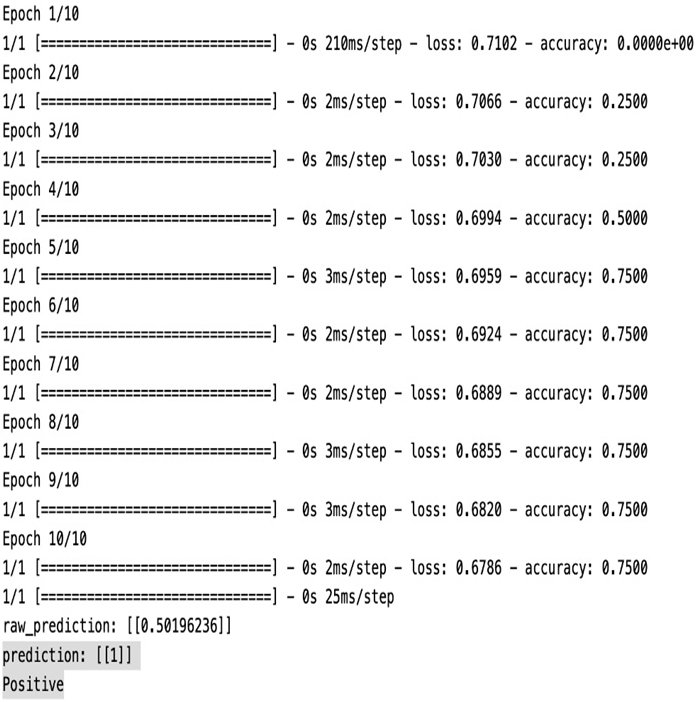

Here’s the output:

Figure 7.11 – Prediction with a neural network model

The predicted label is printed based on the prediction output. If the predicted label is 1, it is considered a positive sentiment, and if it is 0, it is considered a negative sentiment. In summary, the provided code demonstrates a sentiment analysis task using a neural network model.

Summary

In this chapter, we delved into the realm of text data exploration using Python, gaining a comprehensive understanding of harnessing Generative AI and OpenAI models for effective text data labeling. Through code examples, we explored diverse text data labeling tasks, including classification, summarization, and sentiment analysis.

We then extended our knowledge by exploring Snorkel labeling functions, allowing us to label text data with enhanced flexibility. Additionally, we delved into the application of K-means clustering for labeling text data and concluded by discovering how to label customer reviews using neural networks.

With these acquired skills, you now possess the tools to unlock the full potential of your text data, extracting valuable insights for various applications. The next chapter awaits, where we will shift our focus to video data exploration, exploring different methods to gain insights from this dynamic data type.