K-means clustering is a powerful unsupervised machine learning technique used for grouping similar data points into clusters. In the context of text data, K-means clustering can be employed to predict labels or categories for the given text based on their similarity. The provided code showcases how to utilize K-Means clustering to predict labels for movie reviews, breaking down the process into several key steps.

Step 1: Importing libraries and downloading data.

The following code begins by importing essential libraries such as scikit-learn and NLTK. It then downloads the necessary NLTK data, including the movie reviews dataset:

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.cluster import KMeans

from nltk.corpus import movie_reviews

from nltk.corpus import stopwords

from nltk.stem import WordNetLemmatizer

import nltk

import re

# Download the necessary NLTK data

nltk.download(‘movie_reviews’)

nltk.download(‘stopwords’)

nltk.download(‘wordnet’)

Step 2: Retrieving and preprocessing movie reviews.

Retrieve movie reviews from the NLTK dataset and preprocess them. This involves lemmatization, removal of stop words, and converting text to lowercase:

# Get the reviews

reviews = [movie_reviews.raw(fileid) for fileid in movie_reviews.fileids()]

# Preprocess the text

stop_words = set(stopwords.words(‘english’))

lemmatizer = WordNetLemmatizer()

reviews = [‘ ‘.join(lemmatizer.lemmatize(word) for word in re.sub(‘[^a-zA-Z]’, ‘ ‘, review).lower().split() if word not in stop_words) for review in reviews]

Step 3: Creating the TF-IDF vectorizer and transforming data.

Create a TF-IDF vectorizer to convert the preprocessed reviews into numerical features. This step is crucial for preparing the data for clustering:

# Create a TF-IDF vectorizer

vectorizer = TfidfVectorizer()

# Transform the reviews into TF-IDF features

X_tfidf = vectorizer.fit_transform(reviews)

Step 4: Applying K-means clustering.

Apply K-means clustering to the TF-IDF features, specifying the number of clusters. In this case, the code sets n_clusters=3:

# Cluster the reviews using K-means

kmeans = KMeans(n_clusters=3).fit(X_tfidf)

Step 5: Labeling and testing with custom sentences.

Define labels for the clusters and test the K-means classifier with custom sentences. The code preprocesses the sentences, transforms them into TF-IDF features, predicts the cluster, and assigns a label based on the predefined cluster labels:

# Define the labels for the clusters

cluster_labels = {0: “positive”, 1: “negative”, 2: “neutral”}

# Test the classifier with custom sentences

custom_sentences = [“I loved the movie and Best movie I have seen this year.”,

“The movie was terrible.

The plot was non-existent and the acting was subpar.”,

“I have mixed feelings about the movie.it is partly good and partly not good.”]

for sentence in custom_sentences:

# Preprocess the sentence

sentence = ‘ ‘.join(lemmatizer.lemmatize(word) for word in re.sub(‘[^a-zA-Z]’, ‘ ‘, sentence).lower().split() if word not in stop_words)

# Transform the sentence into TF-IDF features

features = vectorizer.transform([sentence])

# Predict the cluster of the sentence

cluster = kmeans.predict(features)

# Get the label for the cluster

label = cluster_labels[cluster[0]]

print(f”Sentence: {sentence}\nLabel: {label}\n”)

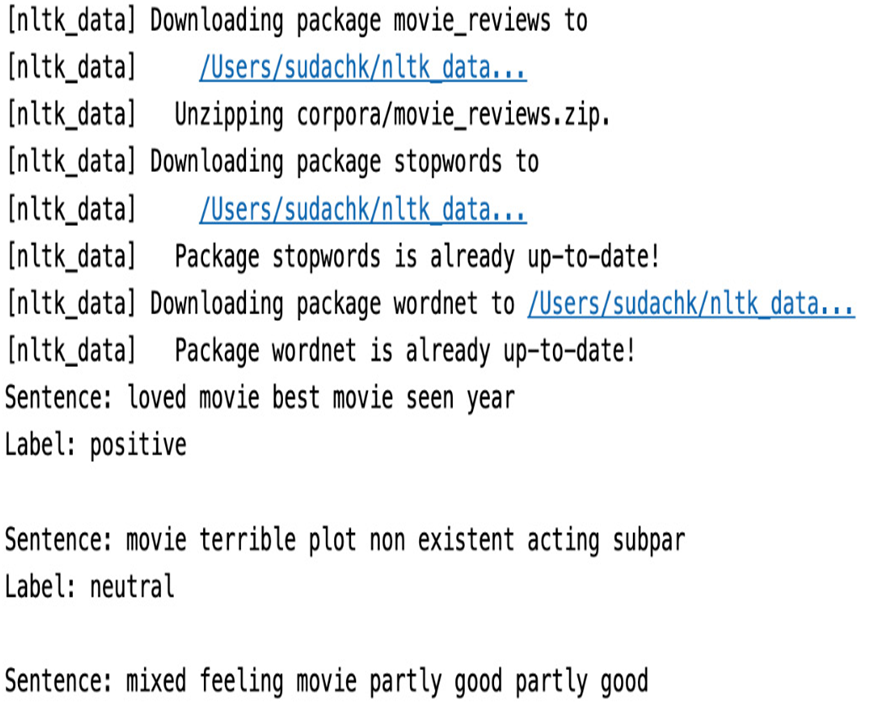

Here’s the output:

Figure 7.10 – K-means clustering for text

This code demonstrates a comprehensive process of utilizing K-means clustering for text label prediction, covering data preprocessing, feature extraction, clustering, and testing with custom sentences.